UNIT - I

Introduction

An operating system is software that manages the computer hardware. The hardware must provide appropriate mechanisms to ensure the correct operation of the computer system and to prevent programs from interfering with the proper operation of the system.

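The main functions of an operating system, each described later under Operating System Functions, fall into four groups: process management (process creation, process scheduling, process termination, and inter-process communication (IPC)), memory management (memory allocation, memory protection, memory mapping, and virtual memory), file system management (file creation/deletion, file reading/writing, directory management, and file permissions), and device management (device drivers, I/O operations, plug and play, and interrupt handling).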

Modern operating systems also share the following characteristics:

- Multiuser: A modern operating system allows multiple users to access and use the system simultaneously.

- Multitasking: The OS manages multiple tasks or processes concurrently, allowing users to switch between applications seamlessly.

- Security: The OS implements security mechanisms to protect data and resources from unauthorized access.

- Memory Management: The OS allocates memory to processes and applications and reclaims it when it is no longer needed.

- Virtualization: The OS provides virtual resources, such as virtual memory and virtual CPUs, to abstract and manage physical resources.

- Networking: The OS facilitates communication between computers over networks.

- User Interface: A modern OS offers a graphical user interface (GUI) that makes it easier for users to interact with the system and applications.

- Resource Management: The OS manages hardware resources, such as CPU time, memory, and devices, to ensure fair and efficient utilization.

Structure of Operating System:

The structure refers to the organization and components of an operating system. This includes the kernel, file system, device drivers, and user interface layers. The kernel is the core that manages hardware resources and provides essential services to applications.

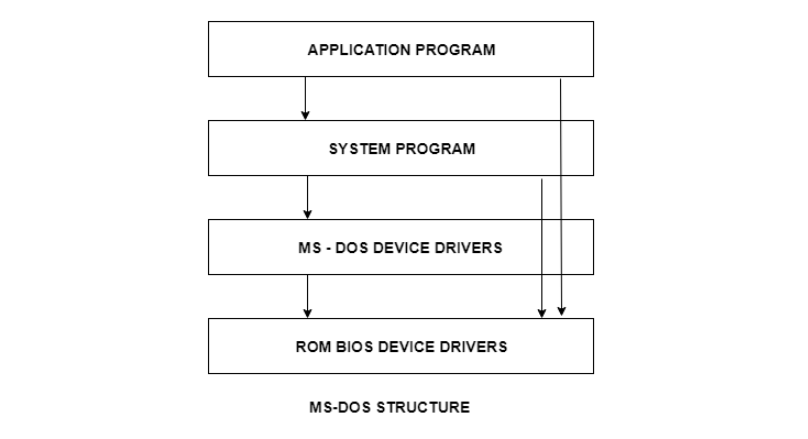

Simple Structure:

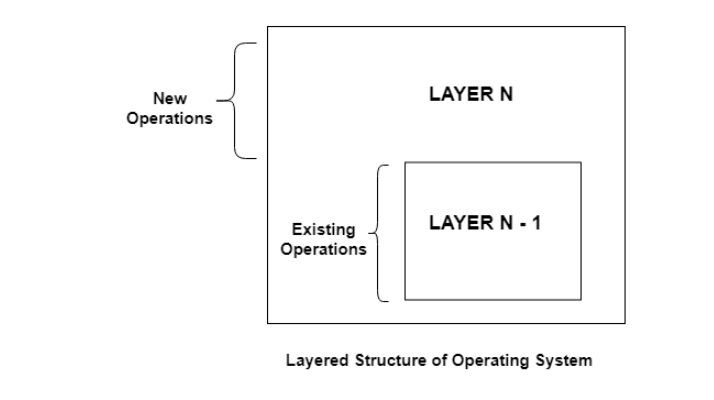

One way to achieve modularity in the operating system is the layered approach. In this, the bottom layer is the hardware and the topmost layer is the user interface. An image demonstrating the layered approach is as follows −

Layered Structure:

There are many operating systems that have a rather simple structure. These started as small systems and rapidly expanded much further than their scope. A common example of this is MS-DOS. It was designed simply for a niche amount for people. There was no indication that it would become so popular. An image to illustrate the structure of MS-DOS is as follows −

Operating System Functions:

Operating systems perform various functions, including process management, memory management, file system management, and device management. Process management involves creating, scheduling, and terminating processes. Memory management ensures efficient memory allocation and deallocation. File system management handles storage and retrieval of files. Device management manages communication with hardware devices.

The points below explain each of these functions in more detail:

Process Management:

- Process Creation: The OS enables the creation of new processes, allowing programs to run concurrently.

- Process Scheduling: The OS decides which process gets access to the CPU and for how long, ensuring fair and efficient utilization of resources.

- Process Termination: The OS manages the orderly termination of processes, releasing the resources they were using.

- Inter-Process Communication (IPC): The OS facilitates communication and data sharing between different processes, aiding in collaborative tasks.
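The points above can be illustrated with a minimal sketch using Python's standard `subprocess` module. The helper name `run_child` is hypothetical; the sketch only shows a parent creating a child process, waiting for it to terminate, and receiving its output through a pipe, which is one simple form of IPC.

```python
import subprocess
import sys


def run_child(code):
    """Create a child Python process, wait for it to exit, return its output."""
    result = subprocess.run(
        [sys.executable, "-c", code],  # the child runs the same interpreter
        capture_output=True,           # stdout/stderr come back through pipes
        text=True,
        check=True,                    # raise if the child exits with an error
    )
    return result.stdout.strip()


if __name__ == "__main__":
    print(run_child("print(6 * 7)"))
```

The operating system does the actual work here: it creates the process, schedules it alongside the parent, and releases its resources when it exits.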

Memory Management:

- Memory Allocation: The OS assigns memory blocks to processes and ensures each process gets the memory it requires.

- Memory Protection: The OS prevents processes from accessing memory locations they aren't authorized to access, enhancing system stability and security.

- Memory Mapping: The OS facilitates memory mapping, allowing files to be loaded into memory for faster access by processes.

- Virtual Memory: The OS enables processes to use more memory than is physically available by swapping data between RAM and disk storage.
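As a small illustration of memory mapping, the sketch below uses Python's standard `mmap` module to map a file into the process's address space and read it like a byte string. The function name `first_bytes_via_mmap` and the file contents are hypothetical, chosen only for this example.

```python
import mmap
import os
import tempfile


def first_bytes_via_mmap(path, count):
    """Map a file read-only and return its first `count` bytes."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file; the file must not be empty.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            return mapped[:count]


if __name__ == "__main__":
    # Create a temporary file to map (hypothetical contents).
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"hello, mapped file")
    print(first_bytes_via_mmap(path, 5))
    os.remove(path)
```

Once the file is mapped, the OS loads its pages on demand, so the program reads file data with ordinary memory accesses instead of explicit read calls.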

File System Management:

- File Creation/Deletion: The OS provides interfaces for creating and deleting files and for managing their metadata.

- File Reading/Writing: The OS enables applications to read from and write to files, ensuring data integrity and proper synchronization.

- Directory Management: The OS organizes files into directories and subdirectories, helping users locate and manage their files effectively.

- File Permissions: The OS enforces access control by defining who can read, write, or execute certain files, ensuring data security.
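The file operations above can be sketched with Python's standard `os`, `stat`, and `tempfile` modules. This is a minimal example that assumes a POSIX system (permission bits behave differently on Windows); the helper names are hypothetical.

```python
import os
import stat
import tempfile


def create_read_only_file(text):
    """Create a file, write to it, then restrict it to owner read-only."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "w") as f:
        f.write(text)
    os.chmod(path, stat.S_IRUSR)  # owner may read; nobody may write or execute
    return path


def permission_bits(path):
    """Return only the permission bits of the file's mode."""
    return stat.S_IMODE(os.stat(path).st_mode)


if __name__ == "__main__":
    path = create_read_only_file("hello")
    print(oct(permission_bits(path)))
    os.remove(path)  # deletion is governed by the directory's permissions
```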

Device Management:

- Device Drivers: The OS utilizes device drivers to interface with hardware devices, translating higher-level commands into device-specific instructions.

- I/O Operations: The OS manages input/output operations between applications and devices, ensuring data transfer and synchronization.

- Plug and Play: The OS supports the automatic detection and configuration of newly connected hardware devices.

- Interrupt Handling: The OS manages interrupts generated by hardware devices, ensuring timely response and efficient utilization of system resources.

In summary, the operating system performs a multitude of functions that are vital for managing resources, enabling communication, ensuring security, and providing a user-friendly interface within a computer system.

Characteristics of Modern OS:

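Modern operating systems possess several characteristics, listed in the Introduction: multiuser support, multitasking, security, memory management, virtualization, networking, a graphical user interface, and resource management.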

Understanding these aspects of operating systems is crucial for comprehending how they function and interact with software applications.

Process Management

This section covers processes in operating systems: the states a process can be in, how processes are created and terminated, and the operations that can be performed on them. It also touches on concurrent processes, threads, and microkernels.

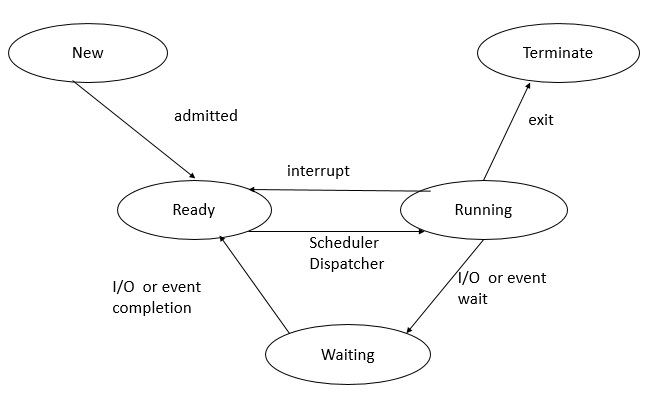

States of a Process:

- New: The process is being created but not yet ready to execute.

- Ready: The process is waiting to be assigned to a processor for execution.

- Running: The process is currently being executed on a CPU.

- Blocked (Waiting): The process is unable to execute because it's waiting for an event like I/O completion or a resource to become available.

- Terminated (Exit): The process has finished executing.
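The states listed above can be modelled as a small state machine. The sketch below is an assumption based on the usual five-state textbook model; the transition table and the names `State`, `TRANSITIONS`, and `can_move` are illustrative and do not come from any particular operating system.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"
    TERMINATED = "terminated"


# Allowed transitions in the assumed five-state model.
TRANSITIONS = {
    State.NEW: {State.READY},          # admitted by the OS
    State.READY: {State.RUNNING},      # dispatched to a CPU
    State.RUNNING: {
        State.READY,                   # preempted (for example, time slice expired)
        State.BLOCKED,                 # waits for I/O or a resource
        State.TERMINATED,              # finished executing
    },
    State.BLOCKED: {State.READY},      # the awaited event occurred
    State.TERMINATED: set(),           # no way out of the final state
}


def can_move(src, dst):
    """Return True if a process may go directly from `src` to `dst`."""
    return dst in TRANSITIONS[src]
```

Note that in this model a blocked process never goes straight back to running: it first becomes ready and waits to be scheduled again.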

Processes vs Threads

| Aspect | Processes | Threads |
|---|---|---|
| Definition | An independent program in execution, with its own memory space and resources. | A smaller unit of a process, sharing memory space and resources within the same process. |
| Resource Overhead | Higher overhead due to separate memory space and resources for each process. | Lower overhead as threads within a process share resources. |
| Communication | More complex and slower inter-process communication (IPC) mechanisms required. | Easier communication via shared memory. |
| Isolation | Processes are isolated, preventing direct impact on others. | Threads share memory, so a bug in one thread can affect others. |
| Creation Time | Creating a new process is more time-consuming. | Creating a new thread is generally quicker. |
| Switching Overhead | Switching between processes requires more overhead. | Thread switching within a process is quicker and requires less overhead. |
| Failure Isolation | Process failures don't affect other processes. | A failure in one thread can affect the entire process. |
| Scalability | Scaling across cores requires several processes, each with its own memory space and IPC cost. | Threads of one process can be spread across cores while sharing data cheaply. |
| Parallelism | Separate processes can run in parallel on different cores. | Kernel-level threads can also run in parallel on different cores; user-level threads mapped to a single kernel thread cannot. |
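The "Communication" and "Isolation" rows can be seen in a short sketch using Python's standard `threading` module. All threads update the same variable directly because they share the process's memory, which is also why a lock is needed. The names `counter`, `add`, and `run_threads` are hypothetical.

```python
import threading

counter = 0                 # shared by every thread in this process
lock = threading.Lock()     # protects the shared counter


def add(n):
    """Increment the shared counter n times."""
    global counter
    for _ in range(n):
        with lock:          # without the lock, updates could be lost
            counter += 1


def run_threads(num_threads=4, n=1000):
    """Start several threads that all update the same counter."""
    global counter
    counter = 0
    threads = [threading.Thread(target=add, args=(n,)) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

Separate processes would each get their own copy of `counter`, so the same result would require an explicit IPC mechanism such as a pipe or shared memory segment.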

CPU Scheduling

CPU scheduling is a crucial aspect of an operating system that determines how the central processing unit (CPU) handles multiple processes concurrently. Different processes compete for CPU time, and the goal is to optimize resource utilization and response time.

There are several types of CPU scheduling algorithms:

- First-Come, First-Served (FCFS)

- Shortest Job Next (SJN) / Shortest Job First (SJF)

- Priority Scheduling

- Round Robin (RR)

- Multilevel Queue Scheduling

- Multilevel Feedback Queue Scheduling

First-Come, First-Served (FCFS):

1. Processes are executed in the order they arrive in the ready queue.

2. The first process in the queue gets the CPU until it completes.

3. FCFS is non-preemptive, meaning a process runs until it finishes or blocks.

4. FCFS can result in a "convoy effect", where short processes wait behind long ones.

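The table below lists the burst time of each process. All processes are assumed to arrive at time 0 in the order P1, P2, P3:

| Process | Burst Time |
|---|---|
| P1 | 24 |
| P2 | 3 |
| P3 | 4 |

Gantt chart: P1 (0 - 24), P2 (24 - 27), P3 (27 - 31)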

Individual Waiting Time: P1: 0, P2: 24, P3: 27

Individual Turn Around Time: P1: 24, P2: 27, P3: 31

Average Waiting Time: (0 + 24 + 27) / 3 = 17

Average Turn Around Time: (24 + 27 + 31) / 3 = 27.33
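The FCFS figures above can be reproduced with a short sketch. It assumes all processes arrive at time 0 in the listed order; the function names `fcfs` and `average` are illustrative.

```python
def fcfs(processes):
    """processes: list of (name, burst) in arrival order, all arriving at time 0.

    Returns two dicts: waiting time and turnaround time per process.
    """
    clock = 0
    waiting, turnaround = {}, {}
    for name, burst in processes:
        waiting[name] = clock        # time spent in the ready queue
        clock += burst               # the process runs to completion
        turnaround[name] = clock     # completion time minus arrival time (0)
    return waiting, turnaround


def average(values):
    values = list(values)
    return sum(values) / len(values)


waiting, turnaround = fcfs([("P1", 24), ("P2", 3), ("P3", 4)])
print(waiting, average(waiting.values()))
print(turnaround, round(average(turnaround.values()), 2))
```

Reordering the input list so the short processes come first shows the convoy effect directly: the average waiting time drops sharply.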

Shortest Job Next (SJN) / Shortest Job First (SJF):

The process with the smallest execution time is given priority. This minimizes average waiting time but requires knowledge of process durations, which might not be available.

1. Selects the process with the shortest burst time first.

2. Non-preemptive SJF allows a process to run until completion before selecting the next.

3. Preemptive SJF allows a newly arrived shorter job to interrupt a longer one that is running.

4. Reduces waiting time for shorter jobs, which lowers the average waiting time across all processes.

Non-Preemptive SJF

Gantt chart: P2 (0 - 3), P3 (3 - 7), P1 (7 - 31)

Individual Waiting Time: P1: 7, P2: 0, P3: 3

Individual Turn Around Time: P1: 31, P2: 3, P3: 7

Average Waiting Time: (7 + 0 + 3) / 3 = 3.33

Average Turn Around Time: (31 + 3 + 7) / 3 = 13.67
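A minimal sketch of non-preemptive SJF follows, using the burst times 24, 3, and 4 from the FCFS table and assuming all processes arrive at time 0. Because every process arrives at time 0, each waiting time equals the process's start time. The function name `sjf_non_preemptive` is illustrative.

```python
def sjf_non_preemptive(bursts):
    """bursts: dict of name -> burst time, all processes arriving at time 0.

    Returns (order, waiting, turnaround).
    """
    # Shortest burst first; the sort is stable, so ties keep input order.
    order = sorted(bursts, key=lambda name: bursts[name])
    clock = 0
    waiting, turnaround = {}, {}
    for name in order:
        waiting[name] = clock        # waited until its start time
        clock += bursts[name]        # runs to completion, no preemption
        turnaround[name] = clock
    return order, waiting, turnaround


order, waiting, turnaround = sjf_non_preemptive({"P1": 24, "P2": 3, "P3": 4})
print(order)
print(waiting, round(sum(waiting.values()) / len(waiting), 2))
print(turnaround, round(sum(turnaround.values()) / len(turnaround), 2))
```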

Preemptive SJF

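Gantt chart intervals as extracted (the process labels and arrival times for this example are not given): 0 - 3, 12 - 20, 3 - 7, 7 - 15, 20 - 31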

Individual Waiting Time: P1: 1, P2: 0, P3: 3

Individual Turn Around Time: P1: 31, P2: 20, P3: 7

Average Waiting Time: (1 + 0 + 3) / 3 = 1.33

Average Turn Around Time: (31 + 20 + 7) / 3 = 19.33
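Preemptive SJF (also called shortest remaining time first) only differs from the non-preemptive version when processes arrive at different times. The sketch below simulates it one time unit at a time. The workload in the usage example is hypothetical and is not the data behind the figures above; the function name `srtf` is illustrative, and burst times are assumed to be positive integers.

```python
def srtf(procs):
    """procs: dict of name -> (arrival, burst), with positive integer bursts.

    Returns (waiting, turnaround) dicts.
    """
    remaining = {name: burst for name, (_, burst) in procs.items()}
    finish = {}
    clock = 0
    while remaining:
        ready = [n for n in remaining if procs[n][0] <= clock]
        if not ready:
            clock += 1               # CPU idles until the next arrival
            continue
        # Shortest remaining time wins; ties go to the earlier arrival.
        current = min(ready, key=lambda n: (remaining[n], procs[n][0]))
        remaining[current] -= 1      # run the chosen process for one time unit
        clock += 1
        if remaining[current] == 0:
            del remaining[current]
            finish[current] = clock
    turnaround = {n: finish[n] - procs[n][0] for n in procs}
    waiting = {n: turnaround[n] - procs[n][1] for n in procs}
    return waiting, turnaround


# Hypothetical workload: name -> (arrival time, burst time).
waiting, turnaround = srtf({"P1": (0, 8), "P2": (1, 4), "P3": (2, 9), "P4": (3, 5)})
print(waiting, sum(waiting.values()) / len(waiting))
```

Re-evaluating the choice after every time unit is what makes the policy preemptive: a newly arrived process with a shorter remaining time takes the CPU at the next step.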

Priority Scheduling:

Each process is assigned a priority, and the CPU executes the highest-priority process first. It can be preemptive (a newly arrived process with a higher priority interrupts the running process) or non-preemptive (once a process starts, it keeps the CPU until it finishes or blocks).
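A minimal sketch of non-preemptive priority scheduling is shown below. It assumes all processes arrive at time 0 and that a lower number means a higher priority (conventions differ between systems). The workload and the function name `priority_schedule` are hypothetical.

```python
def priority_schedule(procs):
    """procs: dict of name -> (burst, priority); lower number = higher priority.

    All processes are assumed to arrive at time 0. Returns (order, waiting).
    """
    # Highest priority first; the stable sort keeps input order on ties.
    order = sorted(procs, key=lambda name: procs[name][1])
    clock = 0
    waiting = {}
    for name in order:
        waiting[name] = clock
        clock += procs[name][0]      # non-preemptive: runs to completion
    return order, waiting


# Hypothetical workload: name -> (burst time, priority).
order, waiting = priority_schedule(
    {"P1": (10, 3), "P2": (1, 1), "P3": (2, 4), "P4": (1, 5), "P5": (5, 2)}
)
print(order)
print(waiting, sum(waiting.values()) / len(waiting))
```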

Round Robin (RR):

Each process gets a fixed time slice (quantum) to execute. If the process doesn't complete in its time slice, it's moved to the back of the queue. This is fair and prevents long processes from hogging the CPU.

1. Divides CPU time into fixed time slices (time quantum).

2. Processes are arranged in a circular queue.

3. A process is preempted if its remaining burst time exceeds the time quantum, which promotes fairness.

4. A context switch happens when a quantum expires or when the running process finishes or blocks.

Gantt chart intervals as extracted (the process labels for this example are not given): 0 - 4, 12 - 16, 20 - 24, 4 - 8, 16 - 20, 8 - 12, 24 - 27, 27 - 30

Individual Waiting Time: P1: 4, P2: 8, P3: 12, P4: 27

Individual Turn Around Time: P1: 16, P2: 20, P3: 27, P4: 30

Average Waiting Time: (4 + 8 + 12 + 27) / 4 = 12.75

Average Turn Around Time: (16 + 20 + 27 + 30) / 4 = 23.25
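The round robin mechanism can be sketched with a queue. As an assumption, this example reuses the three burst times from the FCFS table (24, 3, 4) with a quantum of 4 and all processes arriving at time 0; it is not the four-process workload summarised above. The function name `round_robin` is illustrative.

```python
from collections import deque


def round_robin(bursts, quantum):
    """bursts: dict of name -> burst, all arriving at time 0 in dict order.

    Returns (timeline, waiting, finish); timeline holds (name, start, end).
    """
    queue = deque(bursts.items())
    clock = 0
    finish = {}
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)            # run for at most one quantum
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # preempted: back of the queue
        else:
            finish[name] = clock
    # With arrival at time 0, waiting time = completion time - burst time.
    waiting = {name: finish[name] - bursts[name] for name in bursts}
    return timeline, waiting, finish


timeline, waiting, finish = round_robin({"P1": 24, "P2": 3, "P3": 4}, quantum=4)
print(timeline[:4])
print(waiting, sum(waiting.values()) / len(waiting))
```

Compared with FCFS on the same workload, the short processes P2 and P3 finish early instead of waiting behind P1, at the cost of more context switches.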

Multilevel Queue Scheduling:

Processes are divided into different queues based on priority or type (for example, interactive versus batch). Each queue has its own scheduling algorithm. A process is permanently assigned to one queue and does not move between queues.

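Multilevel Feedback Queue Scheduling:

Similar to multilevel queue scheduling, but processes can move between queues based on their behavior and recent CPU usage, so each process ends up in a queue that suits it. For example, a process that uses up its full time slice can be moved to a lower-priority queue.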
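The movement between queues can be sketched as follows. This is a simplified model under stated assumptions: all processes arrive at time 0, the upper levels use round robin with quanta of 2 and 4, the lowest level runs processes to completion, and there is no priority boosting. The workload and the function name `mlfq` are hypothetical.

```python
from collections import deque


def mlfq(bursts, quanta=(2, 4)):
    """bursts: dict of name -> burst, all arriving at time 0.

    Levels 0..len(quanta)-1 use the given quanta; the last level is FCFS.
    Returns (trace, finish); trace holds (name, level, end_time).
    """
    levels = [deque() for _ in range(len(quanta) + 1)]
    for name, burst in bursts.items():
        levels[0].append((name, burst))          # everyone starts at the top
    clock = 0
    finish = {}
    trace = []
    while any(levels):
        # Always serve the highest-priority non-empty queue.
        level = next(i for i, q in enumerate(levels) if q)
        name, remaining = levels[level].popleft()
        last = level == len(quanta)
        run = remaining if last else min(quanta[level], remaining)
        clock += run
        trace.append((name, level, clock))
        if remaining > run:
            # Used its whole quantum without finishing: demote one level.
            levels[level + 1].append((name, remaining - run))
        else:
            finish[name] = clock
    return trace, finish


# Hypothetical workload.
trace, finish = mlfq({"A": 1, "B": 5, "C": 10})
print(trace)
print(finish)
```

Short jobs finish in the top queue, while CPU-bound jobs sink to lower queues with longer time slices, which is the behavior-based movement described above.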

These algorithms aim to balance the trade-offs between throughput, response time, fairness, and system efficiency based on the specific needs of the computing environment. Different scenarios may require different scheduling strategies.